Does ChatGPT have a political bias?

Detecting political bias in ChatGPT responses using NLP

This project was part of my Natural Language Processing course and explores the political bias of responses generated by the ChatGPT language model.

This project was motivated by the rapid adoption of ChatGPT at the time of writing. While ChatGPT showed great promise for improving productivity and revenue opportunities for businesses, there were concerns about political biases in ChatGPT’s responses, particularly a tendency to favour left-leaning viewpoints.

David Rozado, a research scientist at Te Pūkenga – New Zealand Institute of Skills and Technology, conducted a preliminary analysis of the political bias of ChatGPT soon after its release, between December 2022 and January 2023, and found a left-leaning bias.

While David’s approach involved asking ChatGPT questions based on standard political orientation tests and analysing its level of agreement or disagreement on a Likert-type scale (with only the options Strongly Disagree to Strongly Agree to choose from), this project uses Natural Language Processing to mine the text of ChatGPT’s responses to more open-ended versions of the same questions. I used a labeled corpus of text with corresponding bias labels, obtained from Huggingface, an online AI community, to train a model through supervised learning. The corpus was split into training and test sets to compare the accuracies of different models, so that the best model could be used for the final prediction on ChatGPT’s responses.

The trained model then aims to classify the text of ChatGPT’s responses into 3 categories - Biased Left, Neutral and Biased Right - using some common classification algorithms: Naive Bayes, Random Forest and Support Vector Machines. The expectation is that the responses will show a similar left-leaning bias.

(For a walkthrough of the code and an explanation of each step, check out the long-form article on Medium.)

Tech and techniques used

- Python in Visual Studio

- ChatGPT

- Natural Language Processing

- Classification algorithms: Naive Bayes, Random Forest and Support Vector Machines

Data and corpus

The data analysed was the text of ChatGPT’s responses to carefully posed questions that are based on the political orientation tests, or on subtle variations of them. These responses, along with the questions, are collected in a tabular format that can be viewed in the associated GitHub repository.

The corpus of articles used for training contained 17,362 articles labeled left, right, or center by the editors of allsides.com. Articles were manually annotated by news editors who were attempting to select representative articles from the left, right and center of each article topic. In other words, the dataset should generally be balanced - the left/right/center articles cover the same set of topics, and there are roughly the same number of articles in each category.

Exploration

The training dataset, when extracted into one dataframe, contained 17,362 rows with no missing values. The average number of words in each row is 964.34, with a minimum of 49 and a maximum of 204,273.

Important: The dataframe’s rows need to be shuffled first to avoid all rows of the same labels being together.

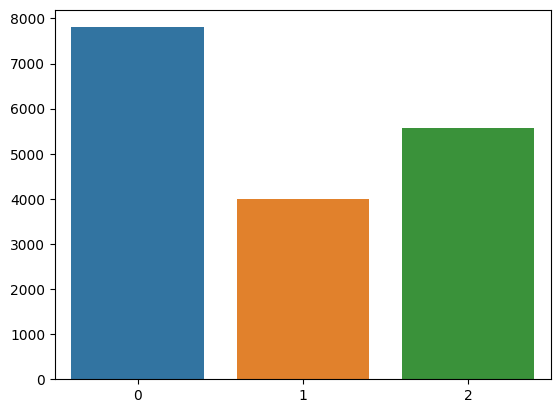

Next, the bias values are encoded as ‘Left’: 0, ‘Center’: 1 and ‘Right’: 2, so that we can do numerical calculations. Plotting the distribution of the bias values gives us the following graph.

This shows that the training dataset itself will not be perfectly balanced, as there are more left-biased samples than others.
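The shuffling, label encoding and class count described above can be sketched with pandas. This is a minimal illustration, not the project's code: the column names `text` and `bias`, the six-row dataframe and the `random_state` value are all assumptions made for the example.

```python
import pandas as pd

# Hypothetical miniature corpus; the real one has 17,362 articles.
# Column names "text" and "bias" are assumptions for this sketch.
df = pd.DataFrame({
    "text": ["article a", "article b", "article c",
             "article d", "article e", "article f"],
    "bias": ["Left", "Left", "Left", "Center", "Right", "Right"],
})

# Shuffle the rows so that articles with the same label are no longer
# grouped together, then rebuild a clean 0..n-1 index.
df = df.sample(frac=1, random_state=42).reset_index(drop=True)

# Encode the labels as integers, using the mapping given in the text.
label_map = {"Left": 0, "Center": 1, "Right": 2}
df["bias_code"] = df["bias"].map(label_map)

# Count how many samples fall into each class to check the balance.
class_counts = df["bias_code"].value_counts().sort_index()
print(class_counts)
```

A bar chart of `class_counts` would give the kind of distribution plot discussed here.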

The analyses involved the usual steps of Text Analysis and Natural Language Processing including:

- Cleaning – removing unnecessary punctuation and symbols, and making all text lowercase.

- Removal of stopwords – these don’t provide much value in predicting political bias, as they are present in all texts (for example, “in”, “next”, “from”, etc.).

- Tagging – the article texts are tagged with the bias values from the corpus labels, producing tagged documents.

- Train-test split – the corpus is split into training and test data.

- Encoding – the text needs to be encoded as numerical values while retaining semantic information. For this, we use the Doc2Vec algorithm.

The Doc2Vec algorithm is a more advanced version of the earlier Word2Vec algorithm. Doc2Vec encodes a whole document of text, as opposed to individual words, into a vector of the size we choose. These Doc2Vec vectors are able to represent the theme or overall meaning of a document. For a new document, the model uses the word relationships learned during training to infer a vector that best predicts the words in that document.

- Building the vocabulary – the build_vocab() method is used to build the vocabulary from the training data.

In this process, the words in the training data are identified and assigned unique integer IDs. This process creates a mapping between words and their IDs, which is stored in the model. Once the vocabulary is built, the model can then use it to learn the patterns and relationships between words in the training data.
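The cleaning and stopword-removal steps listed earlier can be sketched in plain Python. The stopword list, the function names and the sample sentence below are assumptions for illustration; a real project would more likely use a fuller list such as the one shipped with NLTK.

```python
import re

# Small illustrative stopword list (an assumption for this sketch).
STOPWORDS = {"a", "an", "and", "from", "in", "is", "next", "of", "the", "to"}

def clean_text(text):
    """Lowercase the text and replace every character that is not an
    ASCII letter, digit or whitespace with a space."""
    text = text.lower()
    text = re.sub(r"[^a-z0-9\s]", " ", text)
    # Collapse the runs of whitespace left behind by the substitution.
    return re.sub(r"\s+", " ", text).strip()

def remove_stopwords(text):
    """Split cleaned text on whitespace and drop stopwords."""
    return [token for token in text.split() if token not in STOPWORDS]

sample = "Next, the Senate voted in favour of the bill!"
tokens = remove_stopwords(clean_text(sample))
print(tokens)  # ['senate', 'voted', 'favour', 'bill']
```

Note that this simple pattern also strips accented and other non-ASCII letters, which may or may not be acceptable depending on the corpus.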

Training the model

Next, the trained Doc2Vec model and the set of tagged documents were used to generate a tuple of two arrays: one of feature vectors, with one vector inferred for each document, and the other of the bias labels of those documents.

The training data was then fit to three classification algorithms, namely the Naive Bayes Classifier, the Random Forest Classifier and Support Vector Machines, and the test data was used to make predictions in order to identify the best of the three.

The accuracies for the three different algorithms are as follows.

| Algorithm | Accuracy |
| --- | --- |
| Naive Bayes | 0.68 |
| Random Forest | 0.65 |
| Support Vector | 0.77 |

Since the Support Vector classifier has the best results on the test data set, it is used to predict the biases for the ChatGPT responses.
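A comparison of this kind can be sketched with scikit-learn. The data below is synthetic and merely stands in for the Doc2Vec vectors and bias labels, so the accuracies it prints have nothing to do with the figures reported above. The choice of `GaussianNB` is also an assumption: the article does not say which Naive Bayes variant was used, and the Gaussian one is picked here only because Doc2Vec vectors are continuous and can be negative.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.svm import SVC

# Synthetic 3-class data standing in for document vectors and labels.
X, y = make_classification(n_samples=600, n_features=20, n_informative=10,
                           n_classes=3, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y)

# Default or illustrative settings, not the project's configuration.
classifiers = {
    "Naive Bayes": GaussianNB(),
    "Random Forest": RandomForestClassifier(n_estimators=100, random_state=0),
    "Support Vector": SVC(),
}

# Fit each classifier on the training split and score it on the test split.
accuracies = {}
for name, clf in classifiers.items():
    clf.fit(X_train, y_train)
    accuracies[name] = accuracy_score(y_test, clf.predict(X_test))

# Pick the classifier with the highest test accuracy.
best_name = max(accuracies, key=accuracies.get)
for name, acc in accuracies.items():
    print(f"{name}: {acc:.2f}")
print("Best:", best_name)
```

The fitted classifier stored under `classifiers[best_name]` is the one that would then be used for the final predictions.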

Prediction

The ChatGPT responses go through the same steps as the training corpus, namely cleaning, stopwords removal, tokenization, tagging and feature vector generation using the same Doc2Vec model. The resulting vectors are then passed to the Support Vector classifier, the algorithm with the best accuracy, for prediction of the labels. The results are as follows.

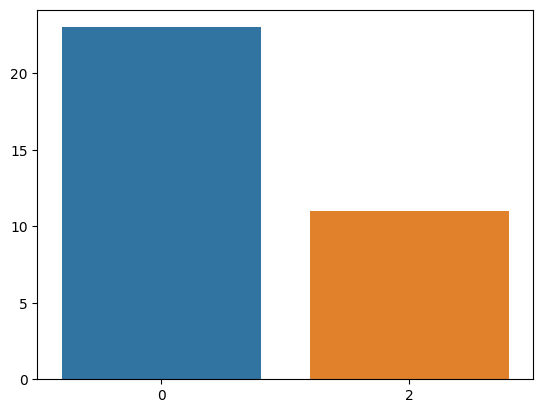

Political Spectrum Quiz (0 represents left-bias and 2 represents right-bias)

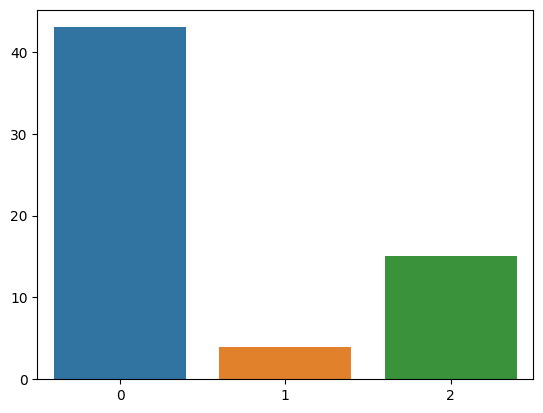

Political Compass Test (0 represents left-bias and 2 represents right-bias)

Results

These results indicate that ChatGPT’s responses to both political orientation tests exhibit a slight left-leaning bias. Interestingly, the first test, the Political Spectrum Quiz, did not show any predictions for center bias, while the second test, the Political Compass Test, had one response (specifically to the question: “Has openness about sex gone too far these days?”) that was predicted to have a center bias label.

Moreover, the averages of the bias values suggest that ChatGPT was more left-leaning when assessed by the Political Compass Test compared to the Political Spectrum Quiz.
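To make the averaging concrete: with the encoding Left = 0, Center = 1 and Right = 2, a mean predicted label below 1 points left and a mean above 1 points right. The sketch below uses made-up placeholder predictions under hypothetical test names, not the project's actual results.

```python
from statistics import mean

# Made-up placeholder predictions (0 = Left, 1 = Center, 2 = Right).
# These are NOT the project's results.
predictions = {
    "Test A": [0, 0, 2, 0, 2, 0],
    "Test B": [0, 0, 0, 1, 0, 2],
}

NEUTRAL = 1  # the encoded value of the center label

def lean(average):
    """Describe which side of the center value an average falls on."""
    if average < NEUTRAL:
        return "left"
    if average > NEUTRAL:
        return "right"
    return "center"

averages = {name: mean(labels) for name, labels in predictions.items()}
for name, avg in averages.items():
    print(f"{name}: mean label {avg:.2f} -> leans {lean(avg)}")
```

One caveat of this summary is that a mean of exactly 1 cannot distinguish all-center predictions from an even mix of left and right, so it is worth reading alongside the per-class counts.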

Final thoughts

This analysis shows that, despite regular improvements to the model and disclaimers that it does not hold personal beliefs or biases, ChatGPT continues to exhibit a preference for left-leaning viewpoints. Detecting such political biases is important for ensuring fairness in the responses, especially as ChatGPT becomes more prevalent in the lives of individuals. Understanding the existence of such biases helps users identify and minimize any unintended favoritism or unfairness in the system’s responses. It also improves transparency and trust in the technology when users know beforehand the extent to which they can expect the answers to be biased.

In addition to this, there is also a bigger picture at hand. In today’s polarized world, political bias is often closely linked to echo chambers. Being exposed to biased content can end up reinforcing existing biases that users may have, which can perpetuate or amplify existing societal divides. Detecting political biases allows for corrective measures to be taken to minimize such unintended reinforcement. With the knowledge and understanding of bias in a platform like ChatGPT, users can consciously seek out alternative viewpoints and not fall into the echo chambers.

Some limitations that can be overcome in future work include the problem of subjectivity in the very categorization and labeling of political bias from the corpus data used for training. Different individuals may interpret political bias differently, and what is considered left, right, or center can be subjective. What constitutes left, right or center leaning viewpoints can also vary across geographical regions and even through time. Political bias is also a complex and multidimensional concept, with various factors such as ideology, values, opinions, and cultural context influencing an individual’s position along a spectrum. Classifying text responses into only three categories may oversimplify the nuanced nature of political bias.

There is also the issue of the 2021 cutoff date for ChatGPT’s knowledge, as its responses are based on a pre-trained language model which might not have up-to-date information beyond 2021. This means it may not be familiar with recent events or developments that could influence political bias and, as such, its responses might not generalize well to new or unseen data.

Lastly, there is the issue of interpretability associated with deep learning models like the one that ChatGPT uses. These models are often considered black boxes, making it challenging to interpret and explain their predictions. Understanding how the model arrived at its classifications might be difficult, limiting the ability to validate or question the results.